When it comes to data backup, one of the most debated topics is the frequency of full backups. For many users, the choice between weekly and monthly full backups comes down to balancing storage constraints, data restoration speed, and the level of data protection required. While incremental backups help reduce the load on storage, a full backup is essential to ensure a solid recovery point, independent of daily incremental changes.

In this post, we’ll explore the benefits of both weekly and monthly full backups, along with practical tips to help you choose the best backup frequency for your unique data needs.

Why Full Backups Matter

A full backup creates a complete copy of all selected files, applications, and settings. Unlike incremental backups, which capture only the changes since the previous backup, or differential backups, which capture the changes since the last full backup, a full backup gives you a standalone version of your entire dataset. This independence makes full backups crucial for effective disaster recovery and system restoration, because restoring from one does not depend on any earlier backup.

The frequency of these backups affects both the time it takes to perform backups and the speed of data restoration. Regular full backups are particularly useful for heavily used systems or environments with high data turnover (also known as churn rate), where data changes frequently and might not be easily reconstructed from incremental backups alone.

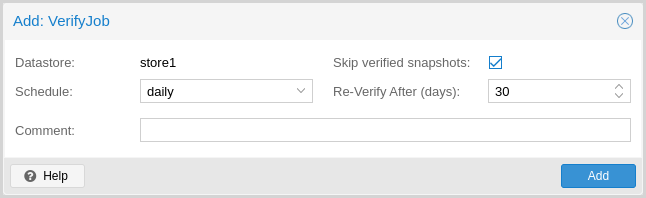

Schedule backup on Catalogic DPX

Weekly Full Backups: The Pros and Cons

Weekly full backups offer a practical solution for users who prioritize speed in recovery processes. Here are some of the main advantages and drawbacks of this approach.

Advantages of Weekly Full Backups

With a recent full backup on hand, you reduce the amount of data that needs to be processed during restoration. This is especially beneficial if your system has a high churn rate, or if rapid recovery is critical for your operations.

A weekly full backup provides more frequent independent recovery points. If an incremental chain becomes corrupted, a recent full backup limits how much data you lose and speeds up recovery.

Weekly full backups break up long chains of incremental backups, simplifying backup management and reducing the risk of issues accumulating over extended chains.

Drawbacks of Weekly Full Backups

Weekly full backups require more storage space, as you’re capturing a complete system image more frequently. For users with limited storage capacity, this might lead to increased costs or the need for additional storage solutions.

A weekly full backup is a more intensive operation compared to daily incrementals. If performed on production servers, it may slow down performance during backup times, especially if the system lacks robust storage infrastructure.

Monthly Full Backups: Benefits and Considerations

For users who want to conserve storage and reduce system load, monthly full backups might be the ideal option. Here’s a closer look at the benefits and potential drawbacks of choosing monthly full backups.

Advantages of Monthly Full Backups

By performing a full backup just once a month, you significantly reduce storage needs. This approach is particularly useful for systems with low daily data change rates, where day-to-day changes are minimal.

Monthly full backups mean fewer instances where the system is under the heavy load of a full backup. If you’re working with limited processing power or storage, this can help maintain system performance while still achieving a comprehensive backup.

For those using paid storage solutions, reducing the number of full backups can lead to cost savings, especially if storage is based on the amount of data retained.
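To make the storage trade-off concrete, here is a rough back-of-the-envelope sketch. It assumes one backup per day, no compression or deduplication, and a "month" of 30 days; the function name and the figures (a 500 GB full backup, 10 GB of daily change, 90 days of retention) are hypothetical and only illustrate the arithmetic.

```python
def retained_storage_gb(full_gb, daily_change_gb, full_interval_days, retention_days):
    """Rough storage needed for all backups taken within the retention window.

    Assumptions (not from any specific product): one backup per day, a full
    backup every `full_interval_days`, incrementals on all other days, and
    no compression or deduplication.
    """
    # Ceiling division: number of full backups taken in the window.
    fulls = -(-retention_days // full_interval_days)
    incrementals = retention_days - fulls
    return fulls * full_gb + incrementals * daily_change_gb


if __name__ == "__main__":
    weekly = retained_storage_gb(500, 10, full_interval_days=7, retention_days=90)
    monthly = retained_storage_gb(500, 10, full_interval_days=30, retention_days=90)
    print(f"weekly fulls:  {weekly} GB")   # 7270 GB
    print(f"monthly fulls: {monthly} GB")  # 2370 GB
```

With these made-up numbers, the weekly schedule keeps roughly three times as much data as the monthly one. Real results depend heavily on compression, deduplication, and how your backup software expires old chains.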

Drawbacks of Monthly Full Backups

- Longer Restore Times

During a restore, relying on a monthly full backup increases the amount of data that must be processed. If your system fails toward the end of the month, you’ll have to restore the full backup and then a long chain of incremental backups, which lengthens the restoration time.

- Higher Dependency on Incremental Chains

Monthly full backups create long chains of incremental backups, meaning you’ll depend on each link in the chain for a successful recovery. Any issue with an incremental backup could compromise the entire chain, making regular health checks essential.

- Older Fallback Recovery Points

With fewer full backups, a damaged incremental chain can force you to fall back to a recovery point that is days or weeks old. The resulting data loss may exceed your recovery point objective (RPO), meaning some data might be unrecoverable if an incident occurs.
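The chain dependency can be illustrated with a small sketch. It models a backup history as a list of daily backups and finds the newest point that can still be restored when one incremental is damaged. The `Backup` class, `build_chain`, and `latest_restorable_day` are hypothetical names for illustration, and the model assumes every incremental depends on all backups since the most recent full.

```python
from dataclasses import dataclass


@dataclass
class Backup:
    day: int              # day the backup was taken
    kind: str             # "full" or "incr"
    healthy: bool = True  # False if the backup is damaged


def build_chain(days, full_interval, corrupt=()):
    """Build a daily chain with a full backup every `full_interval` days."""
    return [
        Backup(d, "full" if d % full_interval == 0 else "incr", d not in corrupt)
        for d in range(days)
    ]


def latest_restorable_day(chain):
    """Return the day of the newest restorable backup, or None.

    `chain` is ordered oldest to newest. An incremental is restorable only
    if the most recent full and every incremental after it are healthy.
    """
    latest = None
    chain_ok = False
    for backup in chain:
        if backup.kind == "full":
            chain_ok = backup.healthy   # a full starts a new chain
        elif not backup.healthy:
            chain_ok = False            # a bad link breaks the rest of the chain
        if chain_ok:
            latest = backup.day
    return latest


if __name__ == "__main__":
    # Same damaged incremental (day 5), failure after day 27.
    print(latest_restorable_day(build_chain(28, 30, corrupt={5})))  # 4
    print(latest_restorable_day(build_chain(28, 7, corrupt={5})))   # 27
```

In this toy model, the monthly schedule falls back to day 4, while the weekly schedule recovers up to day 27 because later full backups start fresh chains.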

Key Factors to Consider in Deciding Backup Frequency

To find the best backup frequency, consider these important factors:

- Data Change Rate

Assess how often your data changes. A high churn rate, where large amounts of data are modified daily, typically favors more frequent full backups, because they reduce your dependency on long incremental chains.

- Recovery Time Objective (RTO)

How quickly do you need to restore data after a failure? Faster recovery is often achievable with weekly full backups, while monthly full backups may require more processing time to restore.
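One way to sanity-check a schedule against your RTO is a simple estimate of worst-case restore time. The sketch below assumes restore time is dominated by the volume of data read (the full backup plus every incremental since) and ignores per-backup overhead; the function names, the 200 GB/hour throughput, and the 3-hour RTO are hypothetical placeholders.

```python
def estimated_restore_hours(full_gb, daily_change_gb, days_since_full, restore_gb_per_hour):
    """Estimate restore time from the data volume that must be processed."""
    data_gb = full_gb + daily_change_gb * days_since_full
    return data_gb / restore_gb_per_hour


def meets_rto(full_gb, daily_change_gb, full_interval_days, restore_gb_per_hour, rto_hours):
    """Check the worst case: a failure on the last day before the next full."""
    worst_case_days = full_interval_days - 1
    hours = estimated_restore_hours(
        full_gb, daily_change_gb, worst_case_days, restore_gb_per_hour
    )
    return hours <= rto_hours


if __name__ == "__main__":
    for label, interval in (("weekly", 7), ("monthly", 30)):
        ok = meets_rto(500, 10, interval, restore_gb_per_hour=200, rto_hours=3)
        print(f"{label} fulls meet a 3-hour RTO: {ok}")
```

Replace the placeholder figures with measured values from a real test restore; actual restore speed varies with storage, network, and the backup software’s own overhead.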

- Retention Policy

Your data retention policy determines how much backup data you keep and for how long. Frequent full backups generally require more storage, so if you must retain backups for long periods, weigh the cumulative size of those full backups accordingly.

- Storage Capacity

Storage limitations can play a big role in determining backup frequency. Weekly full backups require more space, so if storage is constrained, monthly backups might be a better fit.

- Data Sensitivity and Risk Tolerance

Systems with highly sensitive or critical data may benefit from more frequent full backups to mitigate data loss risks and minimize potential downtimes.

Best Practices for Efficient Backup Management

To get the most out of your full backups, consider implementing these best practices:

- Use Synthetic Full Backups

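Synthetic full backups build a new “full” backup on the backup side by combining the previous full backup with the incrementals taken since, so the production system does not have to be read in full again. This approach maintains a recent, independent recovery point while limiting the load on production systems; how much storage it saves depends on whether your backup target can reuse or deduplicate existing backup data.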
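Conceptually, building a synthetic full is a merge of existing backups. The sketch below is a deliberately simplified, file-level illustration: each backup is a dictionary mapping a path to its content, and a `None` value marks a deletion. These conventions and the `synthesize_full` name are assumptions for illustration only; real products typically work at the block level with their own catalogs, and this is not a description of how any particular product implements it.

```python
DELETED = None  # marker used in an incremental for a file that was removed


def synthesize_full(previous_full, incrementals):
    """Merge a full backup with later incrementals into a new full backup.

    `previous_full` maps path -> content. `incrementals` is a list of
    dicts in oldest-to-newest order, each mapping path -> new content,
    or DELETED if the file was removed since the previous backup.
    """
    merged = dict(previous_full)  # leave the original full untouched
    for incremental in incrementals:
        for path, content in incremental.items():
            if content is DELETED:
                merged.pop(path, None)
            else:
                merged[path] = content
    return merged


if __name__ == "__main__":
    full = {"a.txt": "v1", "b.txt": "v1"}
    incrementals = [
        {"a.txt": "v2"},                    # a.txt modified
        {"b.txt": DELETED, "c.txt": "v1"},  # b.txt removed, c.txt added
    ]
    print(synthesize_full(full, incrementals))  # {'a.txt': 'v2', 'c.txt': 'v1'}
```

The merge never touches the production system, which is the main appeal: the new recovery point is assembled entirely from data the backup target already holds.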

- Run Regular Health Checks

Performing regular integrity checks on backups can help catch issues early and ensure that all data is recoverable when needed. Weekly or monthly checks, depending on system load and criticality, can provide peace of mind and prevent chain corruption from impacting your recovery.
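If your backup tool does not provide built-in verification, a basic integrity check can be sketched with checksums. The example below assumes you recorded a SHA-256 hash for each backup file when it was written, stored in a manifest dictionary; the manifest layout and the `find_damaged` helper are hypothetical. A matching checksum only shows the file is unchanged since it was written, so periodic test restores are still worthwhile.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large backups do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_damaged(manifest, backup_dir):
    """Return the sorted names of backup files that are missing or altered.

    `manifest` maps file name -> SHA-256 hex digest recorded at backup time.
    """
    damaged = []
    for name, expected in manifest.items():
        path = Path(backup_dir) / name
        if not path.is_file() or sha256_of(path) != expected:
            damaged.append(name)
    return sorted(damaged)
```

Running a check like this on a schedule, and alerting when the returned list is not empty, gives you a chance to take a fresh full backup before a damaged link is needed for a restore.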

- Review Your Backup Strategy Periodically

Data needs can change over time, so it’s important to revisit your backup frequency, retention policies, and storage usage periodically. Adjusting your approach as your data profile changes helps ensure that your backup strategy remains efficient and effective.

Catalogic: Proven Reliability in Business Continuity

For over 25 years, Catalogic has been a trusted partner in data protection and business continuity. Our backup solutions have helped countless customers maintain seamless operations, even in the face of data disruptions. By providing tailored backup strategies that prioritize both security and efficiency, we ensure that businesses can recover swiftly from any scenario.

If you’re seeking a reliable backup plan that matches your business needs, our team is here to help. Contact us to learn how we can craft a detailed backup strategy that protects your data and keeps your business running smoothly, no matter what.

Finding the Right Balance for Your Data Backup Needs

Deciding between weekly and monthly full backups depends on factors like data change rate, storage capacity, recovery requirements, and risk tolerance. For systems with high data churn or critical recovery needs, weekly full backups can offer the assurance of faster restores. On the other hand, if you’re managing data with lower volatility and need to conserve storage, monthly full backups may provide the balance you need.

Ultimately, the goal is to find a frequency that protects your data effectively while aligning with your technical and operational constraints. Regularly assess and adjust your backup strategy to keep your system secure, responsive, and prepared for the unexpected.

